Coverage, Exploration & Motion Planning

This area studies how single robots and teams of robots explore, cover and move through unknown or partially known environments safely and efficiently. It spans coverage path planning, environment decomposition, multi-robot task allocation and exploration under uncertainty, as well as global and local path planning, sampling-based and search-based methods, trajectory optimization and obstacle avoidance in static or dynamic settings. In our work, this typically means planning missions for teams of aerial, ground and underwater robots that must map unknown terrain, scan large agricultural or natural areas, or inspect infrastructure with limited time and battery capacity. Core challenges include guaranteeing sufficient coverage with minimal overlap, respecting kinematic and dynamic constraints, operating with limited communication and energy, handling perception noise, achieving real-time performance, and interacting safely with other agents in cluttered or unstructured spaces.

Cooperative & Swarm Multi-Robot Systems

This area focuses on how multiple robots coordinate their behavior to achieve tasks more efficiently, more robustly or at larger scale than a single robot could. It spans multi-robot systems and swarm robotics, the latter involving large numbers of relatively simple robots that follow decentralized interaction rules. In practice, we often study how heterogeneous teams of drones, ground vehicles and underwater robots can self-distribute, share information and allocate roles to perform missions such as wide-area monitoring, search or gas-plume tracking without relying on a single central controller. Key topics include distributed control, consensus, task and role allocation, formation control, information sharing and swarm-intelligence techniques. The main challenges are scalability, fault tolerance, predictability of emergent behaviors, and operation under tight sensing and communication constraints.

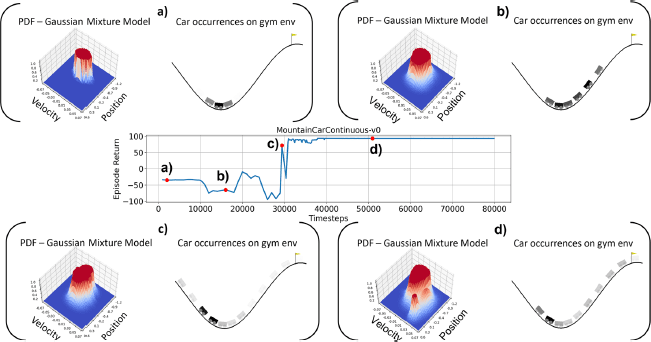

Reinforcement Learning & Learning-Based Control

This area uses reinforcement learning and related learning-based control methods to synthesize robot policies automatically from interaction data, rather than relying on hand-designed controllers. Research covers both single-agent and multi-agent settings, in which autonomous systems learn to make sequential decisions directly from experience. In our work, this typically means enabling mobile robots to explore unknown terrain, coordinating teams of agents, or managing large networks of devices and vehicles (for example, for charging or resource allocation) in a data-driven way that remains robust enough for real-world deployment. Major challenges include improving sample efficiency, ensuring safety and stability during learning and deployment, handling partial observability, and making learned policies interpretable and reliable in realistic conditions.